We Built an AI-Powered Wearable Mobility Solution for the Visually Impaired That Won the “Prix de la Canne Blanche”

Key Highlights of This Success Story

A World in Need of Smarter Mobility

Visually impaired individuals face daily challenges when commuting, from navigating bustling urban centers to traversing quiet suburban streets. For many, traditional aids like white canes and guide dogs are indispensable, yet they often fall short in complex, fast-changing environments.

The Vision Behind NOA: Overcoming Complex Challenges

Seeing this need, Biped AI, a visionary Swiss startup, set out on a mission to transform mobility for blind and visually impaired individuals.

The idea was simple yet revolutionary: combine advanced artificial intelligence with wearable technology to create NOA (Navigation, Obstacles, and AI), a smart harness that is far more than just another mobility gadget.

NOA was designed as a lifeline and smart copilot for visually impaired people and people with hemispatial neglect, providing real-time navigation, obstacle detection, and context-aware guidance so they can travel with confidence.

To power NOA's advanced collision warnings, Biped AI partnered with the Honda Research Institute, leveraging its risk-analysis technology originally developed for Honda’s advanced driver-assistance systems (ADAS) and autonomous driving.

The Challenge They Faced

The vision for the AI-powered wearable mobility solution was remarkable, addressing critical challenges for the visually impaired community. Biped AI dedicated three years to intensive R&D and conducted more than 250 rigorous test sessions to perfect the hardware behind NOA.

To complete the product, Biped AI needed a software platform that would connect users to the Bluetooth hardware, let them configure its functions, and give them access to all of its features, all with maximum accessibility.

Their core challenges included:

Biped AI – an ideal copilot for the visually impaired, bringing together innovation and accessibility for confident journeys.

Our Role in the Journey

We partnered closely with Biped AI to build a comprehensive IoT-integrated software solution that would ultimately redefine how visually impaired users interact with their environment. Our contributions included:

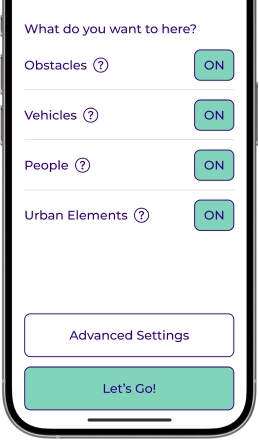

We programmed advanced AI/ML algorithms that enable the harness to interpret and respond to complex surroundings. By distinguishing indoor from outdoor settings and crowded from isolated spaces, the solution adjusts its navigational assistance in real time.

Using machine learning, the solution predicts and recommends optimal paths by analyzing real-time data and historical user behavior. This proactive approach lets the system alert users to potential hazards before they encounter them.

Beyond 3D spatial sound, we explored integrating gentle vibrations and other feedback methods to provide silent alerts, keeping the solution both effective and unobtrusive.

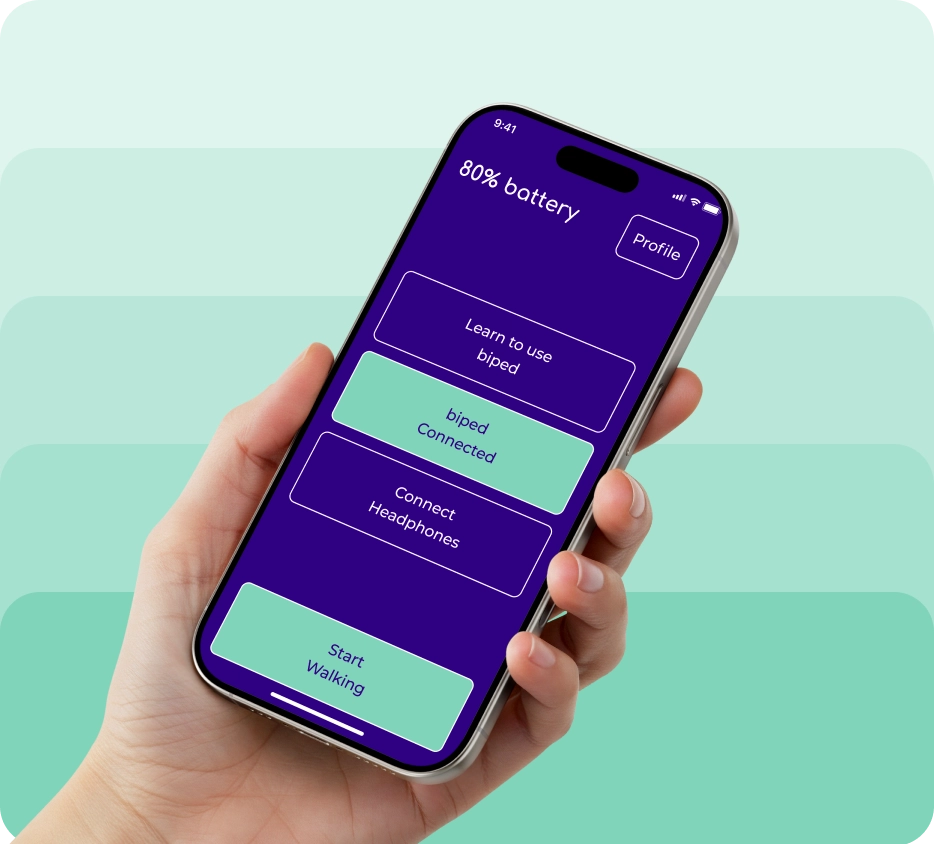

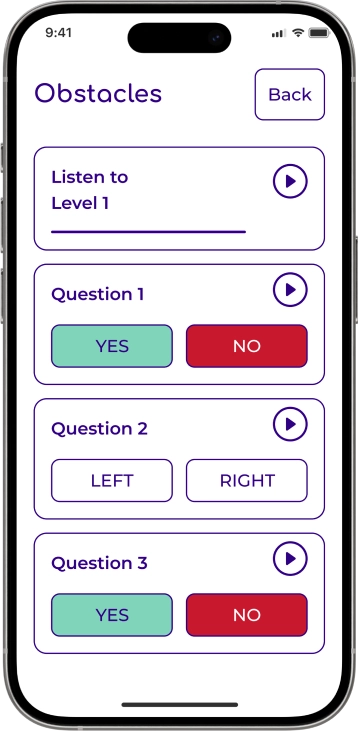

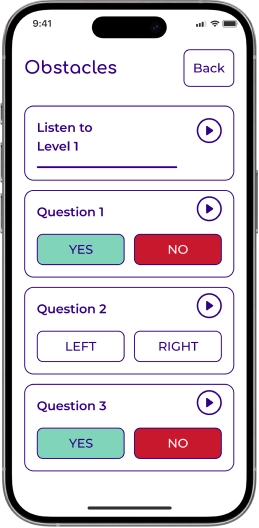

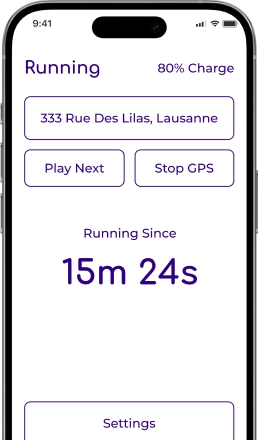

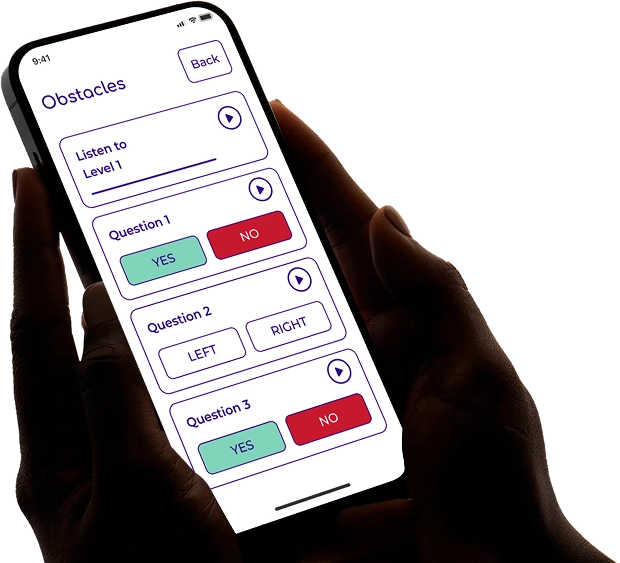

We spearheaded development of the NOA Companion app, a critical tool that connects users to their harness via Bluetooth. The app lets users configure settings, plan routes, and access training modules through a seamless, intuitive experience.

Our engineers optimized algorithms for speed and efficiency, ensuring immediate processing of sensor data. Our computer vision specialists enhanced object recognition capabilities, enabling the device to detect everything from tree branches to vehicles with exceptional accuracy.

We built the app to the highest level of accessibility, reflecting our commitment to inclusive design. The interface features clear, high-contrast visual elements, logical navigation structures, and robust screen reader support. The app also offers voice commands and interactive voice assistance, so users can operate it hands-free and move through its features with ease.

This combination of visual, auditory, and haptic feedback ensures that visually impaired users can interact with the system confidently and efficiently.

Real-World Impact

Collectively, NOA users have walked more than 3,000 km, gaining greater safety and independence in their daily lives.

Strategic Collaborations

Continuous Innovation

Want to achieve impact and success like this customer? Let us bring our expertise in advanced AI and software development to your project.